by Michael Brooks, New Scientist, March 25, 2009

IT IS midnight on 22 September 2012 and the skies above Manhattan are filled with a flickering curtain of colourful light. Few New Yorkers have seen the aurora this far south but their fascination is short-lived. Within a few seconds, electric bulbs dim and flicker, then become unusually bright for a fleeting moment. Then all the lights in the state go out. Within 90 seconds, the entire eastern half of the US is without power.

A year later and millions of Americans are dead and the nation's infrastructure lies in tatters. The World Bank declares America a developing nation. Europe, Scandinavia, China and Japan are also struggling to recover from the same fateful event -- a violent storm, 150 million kilometres away on the surface of the sun.

It sounds ridiculous. Surely the sun couldn't create so profound a disaster on Earth. Yet an extraordinary report funded by NASA and issued by the US National Academy of Sciences (NAS) in January this year claims it could do just that.

Over the last few decades, western civilisations have busily sown the seeds of their own destruction. Our modern way of life, with its reliance on technology, has unwittingly exposed us to an extraordinary danger: plasma balls spewed from the surface of the sun could wipe out our power grids, with catastrophic consequences.

The projections of just how catastrophic make chilling reading. "We're moving closer and closer to the edge of a possible disaster," says Daniel Baker, a space weather expert based at the University of Colorado in Boulder, and chair of the NAS committee responsible for the report.

It is hard to conceive of the sun wiping out a large amount of our hard-earned progress. Nevertheless, it is possible. The surface of the sun is a roiling mass of plasma -- charged high-energy particles -- some of which escape the surface and travel through space as the solar wind. From time to time, that wind carries a billion-tonne glob of plasma, a fireball known as a coronal mass ejection (see "When hell comes to Earth"). If one should hit the Earth's magnetic shield, the result could be truly devastating.

The incursion of the plasma into our atmosphere causes rapid changes in the configuration of Earth's magnetic field which, in turn, induce currents in the long wires of the power grids. The grids were not built to handle this sort of direct current electricity. The greatest danger is at the step-up and step-down transformers used to convert power from its transport voltage to domestically useful voltage. The increased direct current creates strong magnetic fields that saturate a transformer's magnetic core. The result is runaway current in the transformer's copper wiring, which rapidly heats up and melts. This is exactly what happened in the Canadian province of Quebec in March 1989, when six million people spent nine hours without electricity. But things could get much, much worse than that.

Worse than Katrina

The most serious space weather event in history happened in 1859. It is known as the Carrington event, after the British amateur astronomer Richard Carrington, who was the first to note its cause: "two patches of intensely bright and white light" emanating from a large group of sunspots. The Carrington event comprised eight days of severe space weather.

There were eyewitness accounts of stunning auroras, even at equatorial latitudes. The world's telegraph networks experienced severe disruptions, and Victorian magnetometers were driven off the scale.

Though a solar outburst could conceivably be more powerful, "we haven't found an example of anything worse than a Carrington event," says James Green, head of NASA's planetary division and an expert on the events of 1859. "From a scientific perspective, that would be the one that we'd want to survive." However, the prognosis from the NAS analysis is that, thanks to our technological prowess, many of us may not.

There are two problems to face. The first is the modern electricity grid, which is designed to operate at ever higher voltages over ever larger areas. Though this provides a more efficient way to run the electricity networks, minimising power losses and wastage through overproduction, it has made them much more vulnerable to space weather. The high-power grids act as particularly efficient antennas, channelling enormous direct currents into the power transformers.

The second problem is the grid's interdependence with the systems that support our lives: water and sewage treatment, supermarket delivery infrastructures, power station controls, financial markets and many others all rely on electricity. Put the two together, and it is clear that a repeat of the Carrington event could produce a catastrophe the likes of which the world has never seen. "It's just the opposite of how we usually think of natural disasters," says John Kappenman, a power industry analyst with the Metatech Corporation of Goleta, California, and an advisor to the NAS committee that produced the report. "Usually the less developed regions of the world are most vulnerable, not the highly sophisticated technological regions."

According to the NAS report, a severe space weather event in the US could induce ground currents that would knock out 300 key transformers within about 90 seconds, cutting off the power for more than 130 million people (see map). From that moment, the clock is ticking for America.

First to go -- immediately for some people -- is drinkable water. Anyone living in a high-rise apartment, where water has to be pumped to reach them, would be cut off straight away. For the rest, drinking water will still come through the taps for maybe half a day. With no electricity to pump water from reservoirs, there is no more after that.

There is simply no electrically powered transport: no trains, underground or overground. Our just-in-time culture for delivery networks may represent the pinnacle of efficiency, but it means that supermarket shelves would empty very quickly -- delivery trucks could only keep running until their tanks ran out of fuel, and there is no electricity to pump any more from the underground tanks at filling stations.

Back-up generators would run at pivotal sites -- but only until their fuel ran out. For hospitals, that would mean about 72 hours of running a bare-bones, essential-care-only service. After that, no more modern healthcare.

72 hours of healthcare remaining

The truly shocking finding is that this whole situation would not improve for months, maybe years: melted transformer hubs cannot be repaired, only replaced. "From the surveys I've done, you might have a few spare transformers around, but installing a new one takes a well-trained crew a week or more," says Kappenman. "A major electrical utility might have one suitably trained crew, maybe two."

Within a month, then, the handful of spare transformers would be used up. The rest will have to be built to order, something that can take up to 12 months.

Even when some systems are capable of receiving power again, there is no guarantee there will be any to deliver. Almost all natural gas and fuel pipelines require electricity to operate. Coal-fired power stations usually keep reserves to last 30 days, but with no transport systems running to bring more fuel, there will be no electricity in the second month.

Nuclear power stations wouldn't fare much better. They are programmed to shut down in the event of serious grid problems and are not allowed to restart until the power grid is up and running.

With no power for heating, cooling or refrigeration systems, people could begin to die within days. There is immediate danger for those who rely on medication. Lose power to New Jersey, for instance, and you have lost a major centre of production of pharmaceuticals for the entire US. Perishable medications such as insulin will soon be in short supply. "In the US alone there are a million people with diabetes," Kappenman says. "Shut down production, distribution and storage and you put all those lives at risk in very short order."

Help is not coming any time soon, either. If it is dark from the eastern seaboard to Chicago, some affected areas are hundreds, maybe thousands of miles away from anyone who might help. And those willing to help are likely to be ill-equipped to deal with the sheer scale of the disaster. "If a Carrington event happened now, it would be like a hurricane Katrina, but 10 times worse," says Paul Kintner, a plasma physicist at Cornell University in Ithaca, New York.

In reality, it would be much worse than that. Hurricane Katrina's societal and economic impact has been measured at $81 billion to $125 billion. According to the NAS report, the impact of what it terms a "severe geomagnetic storm scenario" could be as high as $2 trillion. And that's just the first year after the storm. The NAS puts the recovery time at four to 10 years. It is questionable whether the US would ever bounce back.
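As a rough check on the scale of those figures, the ratio of the NAS estimate to the Katrina range can be worked out directly. The sketch below uses only the dollar amounts quoted above; the variable and function names are illustrative and come from neither the article nor the NAS report.

```python
# Dollar figures as quoted in the article (US dollars).
KATRINA_LOW = 81e9        # lower estimate of Katrina's impact
KATRINA_HIGH = 125e9      # upper estimate of Katrina's impact
STORM_FIRST_YEAR = 2e12   # NAS upper figure for the first year after the storm


def times_worse(storm_cost: float, reference_cost: float) -> float:
    """Return how many times larger storm_cost is than reference_cost."""
    return storm_cost / reference_cost


# Compare against the high Katrina estimate (smallest ratio)
# and against the low Katrina estimate (largest ratio).
low_ratio = times_worse(STORM_FIRST_YEAR, KATRINA_HIGH)
high_ratio = times_worse(STORM_FIRST_YEAR, KATRINA_LOW)

print(f"First-year storm cost: {low_ratio:.0f} to {high_ratio:.0f} times Katrina's")
```

On these numbers the first-year cost alone comes to roughly 16 to 25 times that of Katrina, before the four to 10 years of recovery are counted.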

"I don't think the NAS report is scaremongering," says Mike Hapgood, who chairs the European Space Agency's space weather team. Green agrees. "Scientists are conservative by nature and this group is really thoughtful," he says. "This is a fair and balanced report."

Such nightmare scenarios are not restricted to North America. High-latitude nations such as Sweden and Norway have been aware for a while that, while regular views of the aurora are pretty, they are also reminders of an ever-present threat to their electricity grids. However, the trend towards installing extremely high voltage grids means that lower-latitude countries are also at risk. For example, China is on the way to implementing a 1000-kilovolt electrical grid, twice the voltage of the US grid. This would be a superb conduit for space weather-induced disaster because the grid's efficiency as an antenna rises as the voltage between the grid and the ground increases. "China is going to discover at some point that they have a problem," Kappenman says.

Neither is Europe sufficiently prepared. Responsibility for dealing with space weather issues is "very fragmented" in Europe, says Hapgood.

Europe's electricity grids, on the other hand, are highly interconnected and extremely vulnerable to cascading failures. In 2006, the routine switch-off of a small part of Germany's grid -- to let a ship pass safely under high-voltage cables -- caused a cascade power failure across western Europe. In France alone, five million people were left without electricity for two hours. "These systems are so complicated we don't fully understand the effects of twiddling at one place," Hapgood says. "Most of the time it's alright, but occasionally it will get you."

The good news is that, given enough warning, the utility companies can take precautions, such as adjusting voltages and loads, and restricting transfers of energy so that sudden spikes in current don't cause cascade failures. There is still more bad news, however. Our early warning system is becoming more unreliable by the day.

By far the most important indicator of incoming space weather is NASA's Advanced Composition Explorer (ACE). The probe, launched in 1997, has a solar orbit that keeps it directly between the sun and Earth. Its uninterrupted view of the sun means it gives us continuous reports on the direction and velocity of the solar wind and other streams of charged particles that flow past its sensors. ACE can provide between 15 and 45 minutes' warning of any incoming geomagnetic storms. The power companies need about 15 minutes to prepare their systems for a critical event, so that would seem passable.

However, observations of the sun and magnetometer readings during the Carrington event show that the coronal mass ejection was travelling so fast it took less than 15 minutes to get from where ACE is positioned to Earth. "It arrived faster than we can do anything," Hapgood says.
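The link between plasma speed and warning time is simple arithmetic: the warning is the distance from ACE to Earth divided by the speed of the incoming plasma. The sketch below is a back-of-envelope estimate, not the NAS committee's method. It assumes ACE sits about 1.5 million kilometres sunward of Earth and that a Carrington-class ejection crosses the 150 million kilometres from the sun in roughly 17.6 hours; neither figure appears in the article, and the function names are illustrative.

```python
# Assumption (not stated in the article): ACE sits about 1.5 million km
# sunward of Earth, near the L1 point.
ACE_TO_EARTH_KM = 1.5e6
SUN_TO_EARTH_KM = 150e6  # sun-Earth distance quoted in the article


def warning_minutes(speed_km_s: float) -> float:
    """Minutes between plasma passing ACE and reaching Earth at constant speed."""
    return ACE_TO_EARTH_KM / speed_km_s / 60.0


def speed_for_warning(minutes: float) -> float:
    """Constant speed (km/s) that would give the stated warning time."""
    return ACE_TO_EARTH_KM / (minutes * 60.0)


def average_speed(transit_hours: float) -> float:
    """Average sun-to-Earth speed (km/s) for a given total transit time."""
    return SUN_TO_EARTH_KM / (transit_hours * 3600.0)


# Speeds implied by the 45- and 15-minute warnings quoted above.
for minutes in (45, 15):
    print(f"{minutes} min of warning implies about {speed_for_warning(minutes):.0f} km/s")

# Assumption: a commonly cited 17.6-hour transit for the 1859 ejection.
carrington_speed = average_speed(17.6)
print(f"Carrington-class: about {carrington_speed:.0f} km/s, "
      f"{warning_minutes(carrington_speed):.1f} min of warning")
```

Under these assumptions, the 15-minute margin the power companies need corresponds to plasma moving at a little under 1700 kilometres per second. A Carrington-class ejection averaging well over 2000 kilometres per second would leave only about 10 or 11 minutes.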

There is another problem. ACE is 11 years old, and operating well beyond its planned lifespan. The onboard detectors are not as sensitive as they used to be, and there is no telling when they will finally give up the ghost. Furthermore, its sensors become saturated in the event of a really powerful solar flare. "It was built to look at average conditions rather than extremes," Baker says.

He was part of a space weather commission that three years ago warned about the problems of relying on ACE. "It's been on my mind for a long time," he says. "To not have a spare, or a strategy to replace it if and when it should fail, is rather foolish."

There is no replacement for ACE due any time soon. Other solar observation satellites, such as the Solar and Heliospheric Observatory (SOHO), can provide some warning, but with less detailed information and -- crucially -- much later. "It's quite hard to assess what the impact of losing ACE will be," Hapgood says. "We will largely lose the early warning capability."

The world will, most probably, yawn at the prospect of a devastating solar storm until it happens. Kintner says his students show a "deep indifference" when he lectures on the impact of space weather. But if policy-makers show a similar indifference in the face of the latest NAS report, it could cost tens of millions of lives, Kappenman reckons. "It could conceivably be the worst natural disaster possible," he says.

The report outlines the worst case scenario for the US. The "perfect storm" is most likely on a spring or autumn night in a year of heightened solar activity -- something like 2012. Around the equinoxes, the orientation of the Earth's field to the sun makes us particularly vulnerable to a plasma strike.

What's more, at these times of year, electricity demand is relatively low because no one needs too much heating or air conditioning. With only a handful of the US grid's power stations running, the system relies on computer algorithms shunting large amounts of power around the grid and this leaves the network highly vulnerable to sudden spikes.

If ACE has failed by then, or a plasma ball flies at us too fast for any warning from ACE to reach us, the consequences could be staggering. "A really large storm could be a planetary disaster," Kappenman says.

So what should be done? No one knows yet -- the report is meant to spark that conversation. Baker is worried, though, that the odds are stacked against that conversation really getting started. As the NAS report notes, it is terribly difficult to inspire people to prepare for a potential crisis that has never happened before and may not happen for decades to come. "It takes a lot of effort to educate policy-makers, and that is especially true with these low-frequency events," he says.

We should learn the lessons of hurricane Katrina, though, and realise that "unlikely" doesn't mean "won't happen." Especially when the stakes are so high. The fact is, it could come in the next three or four years -- and with devastating effects. "The Carrington event happened during a mediocre, ho-hum solar cycle," Kintner says. "It came out of nowhere, so we just don't know when something like that is going to happen again."

Link to article: http://www.newscientist.com/article/mg20127001.300-space-storm-alert-90-seconds-from-catastrophe.html?full=true


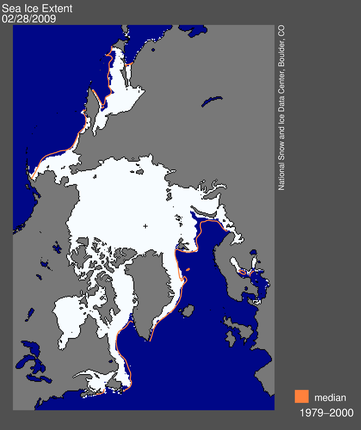

Figure 1. Arctic sea ice extent for February 28, 2009, the date of the annual maximum, was 15.14 million square kilometers (5.85 million square miles). The orange line shows the 1979 to 2000 median extent for that day. The black cross indicates the geographic North Pole. Credit: National Snow and Ice Data Center

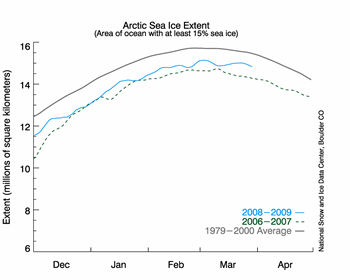

Figure 2. The graph above shows daily sea ice extent. The solid blue line indicates 2008 to 2009; the dashed green line shows 2006 to 2007 (the record-low summer minimum occurred in 2007); and the solid gray line indicates average extent from 1979 to 2000. Credit: National Snow and Ice Data Center


Present address: Department of Mar
